Introduction

Advanced machine learning models have improved geospatial data analysis over the past decades, and many geospatial researchers have successfully applied them to land cover mapping and modeling studies. To date, many of these researchers use a model-centric approach that focuses on improving the machine learning algorithms applied to benchmark geospatial datasets. While improving algorithms is valuable, significant challenges remain. For example, limited and imbalanced training data, inadequate model evaluation measures, and a lack of explainability affect most machine learning methods. In addition, researchers rarely report the quality of their training data or how their models arrive at predictions. The following is a brief overview of some of these challenges.

Limited and imbalanced training data

Standard machine learning models assume that training data is adequate and balanced. However, balanced training data is scarce in real-world geospatial applications. Machine learning algorithms often perform poorly when certain classes are under-represented or over-represented in the training data. Although various data-balancing techniques are available, they often fall short, especially for under-represented classes and severely skewed class distributions. In general, data-balancing techniques increase model accuracy only superficially. This introduces the risk of a model that reports high accuracy yet produces inaccurate and misleading predictions.
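To make this risk concrete, here is a minimal sketch in Python. It assumes a hypothetical land cover label set in which one class dominates; the class names and counts are invented for illustration and do not come from any real dataset. A "model" that always predicts the majority class reaches high overall accuracy while never detecting the rare classes.

```python
from collections import Counter

# Hypothetical reference labels (invented counts): one class dominates.
labels = ["forest"] * 950 + ["cropland"] * 40 + ["wetland"] * 10

counts = Counter(labels)
majority_class, _ = counts.most_common(1)[0]

# Imbalance ratio: size of the largest class divided by size of the smallest.
imbalance_ratio = max(counts.values()) / min(counts.values())

# A trivial baseline that always predicts the majority class.
predictions = [majority_class] * len(labels)

# Overall accuracy: share of all samples predicted correctly.
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Recall per class: share of each class's samples predicted correctly.
recall = {
    cls: sum(p == y for p, y in zip(predictions, labels) if y == cls) / n
    for cls, n in counts.items()
}

print(f"imbalance ratio: {imbalance_ratio:.0f}:1")
print(f"overall accuracy: {accuracy:.2f}")
for cls, value in recall.items():
    print(f"recall for {cls}: {value:.2f}")
```

In this invented example the overall accuracy is 0.95, yet recall for cropland and wetland is zero, which is the kind of misleading result described above.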

Inadequate model evaluation measures

In general, the performance of a machine learning model is judged by its accuracy on test data, often estimated with cross-validation techniques. However, high accuracy on test data does not provide enough confidence in a model's performance when the underlying data is limited and imbalanced. Therefore, geospatial researchers need to develop more rigorous evaluation measures to quantify the performance of machine learning models. Robust model evaluation will allow credible use of machine learning models in geospatial applications.
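As one illustration of measures that go beyond overall accuracy, the sketch below computes per-class recall, precision, F1, and balanced accuracy from a confusion matrix. The matrix values and class names are hypothetical, and these particular measures are an assumption about what a more rigorous evaluation might include, not a prescribed set.

```python
# Hypothetical confusion matrix: rows = reference class, columns = predicted class.
classes = ["forest", "cropland", "wetland"]
confusion = [
    [940, 8, 2],   # reference: forest
    [25, 14, 1],   # reference: cropland
    [7, 1, 2],     # reference: wetland
]


def per_class_metrics(matrix):
    """Return (recall, precision, f1) lists with one value per class."""
    n = len(matrix)
    recall, precision, f1 = [], [], []
    for i in range(n):
        true_pos = matrix[i][i]
        row_total = sum(matrix[i])                       # reference samples of class i
        col_total = sum(matrix[r][i] for r in range(n))  # samples predicted as class i
        rec = true_pos / row_total if row_total else 0.0
        prec = true_pos / col_total if col_total else 0.0
        f = 2 * prec * rec / (prec + rec) if (prec + rec) else 0.0
        recall.append(rec)
        precision.append(prec)
        f1.append(f)
    return recall, precision, f1


total = sum(sum(row) for row in confusion)
overall_accuracy = sum(confusion[i][i] for i in range(len(confusion))) / total

recall, precision, f1 = per_class_metrics(confusion)

# Balanced accuracy: unweighted mean of per-class recall.
balanced_accuracy = sum(recall) / len(recall)

print(f"overall accuracy:  {overall_accuracy:.3f}")
print(f"balanced accuracy: {balanced_accuracy:.3f}")
for name, r, p, f in zip(classes, recall, precision, f1):
    print(f"{name:9s} recall={r:.2f} precision={p:.2f} f1={f:.2f}")
```

With these invented numbers the overall accuracy is about 0.96, while balanced accuracy is close to 0.51 because the two rare classes are mostly missed. In remote sensing terms, per-class recall corresponds to producer's accuracy and per-class precision to user's accuracy.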

Lack of explainability inhibits trust in machine learning models

For some time, many researchers assumed that increasing model complexity would solve the problem of limited and imbalanced training data. Although increasing model complexity can improve overall accuracy, it makes the models harder to interpret. For instance, it is difficult to explain the whole forest in a random forest (RF) model (compared to a single decision tree) or to understand the high dimensionality and data transformations in a support vector machine (SVM) model. Understanding why a machine learning model makes a prediction is critical because it provides new insights into whether the model is good or bad.
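One model-agnostic way to probe why a model predicts what it does is permutation feature importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal illustration, not a method taken from this article. The `model` function is a hypothetical stand-in for a trained RF or SVM classifier, and the samples are invented.

```python
import random


def model(row):
    """Toy stand-in for a trained classifier: uses feature 0, ignores feature 1."""
    return 1 if row[0] > 0.5 else 0


def accuracy(rows, labels):
    """Share of samples for which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(rows, labels, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild every row with only feature j replaced by its shuffled value.
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical samples [feature_0, feature_1]; only feature_0 determines the label.
rows = [[0.9, 0.2], [0.1, 0.8]] * 10
labels = [1, 0] * 10

importance = permutation_importance(rows, labels)
for j, value in enumerate(importance):
    print(f"feature_{j}: mean accuracy drop = {value:.2f}")
```

Shuffling feature_1 leaves accuracy unchanged because the toy model never reads it, while shuffling feature_0 lowers accuracy. A larger drop suggests the model relies more heavily on that feature, which gives a first view into an otherwise opaque model.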

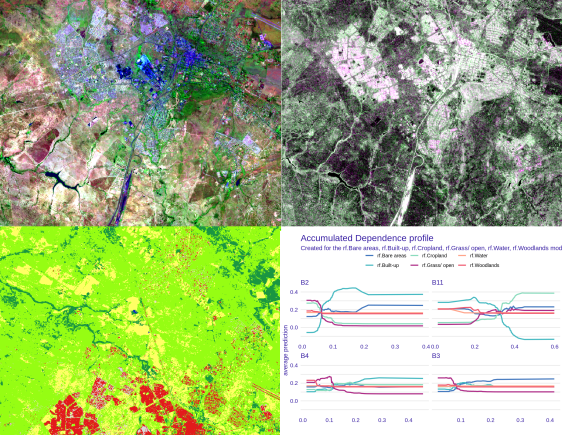

Towards data-centric explainable machine learning

During the last decade, there has been a proliferation of advanced machine learning models that are highly predictive. Geospatial researchers and scientists use these models to address some pressing climate change challenges. However, most advanced machine learning models are opaque and difficult to interpret and explain, so it has become increasingly critical to understand and explain how they work. Some experts and researchers have called on the machine learning community to embrace a data-centric explainable machine learning approach, which focuses on improving the quality of training data and explaining how the models work. To improve the accuracy of machine learning models, geospatial researchers should acquire quality training data and check the models using explainable machine learning techniques in an iterative process of model development.

Next Steps

Several books cover explainable and interpretable machine learning methods (https://ema.drwhy.ai/preface.html; https://christophm.github.io/interpretable-ml-book/index.html), and interested readers should refer to them. I have also published a book on data-centric explainable machine learning methods. If you want to learn more about the book, please check the information below:

Data-centric Explainable Machine Learning