Introduction

Machine learning models are powerful tools for geospatial data analysis. Over the past decades, geospatial researchers and analysts have successfully used them to address environmental and climate change challenges. However, most of this work has focused on training and optimizing models, with performance measures such as accuracy or error used to choose the best one. Yet most geospatial researchers do not examine how and why these models make their predictions.

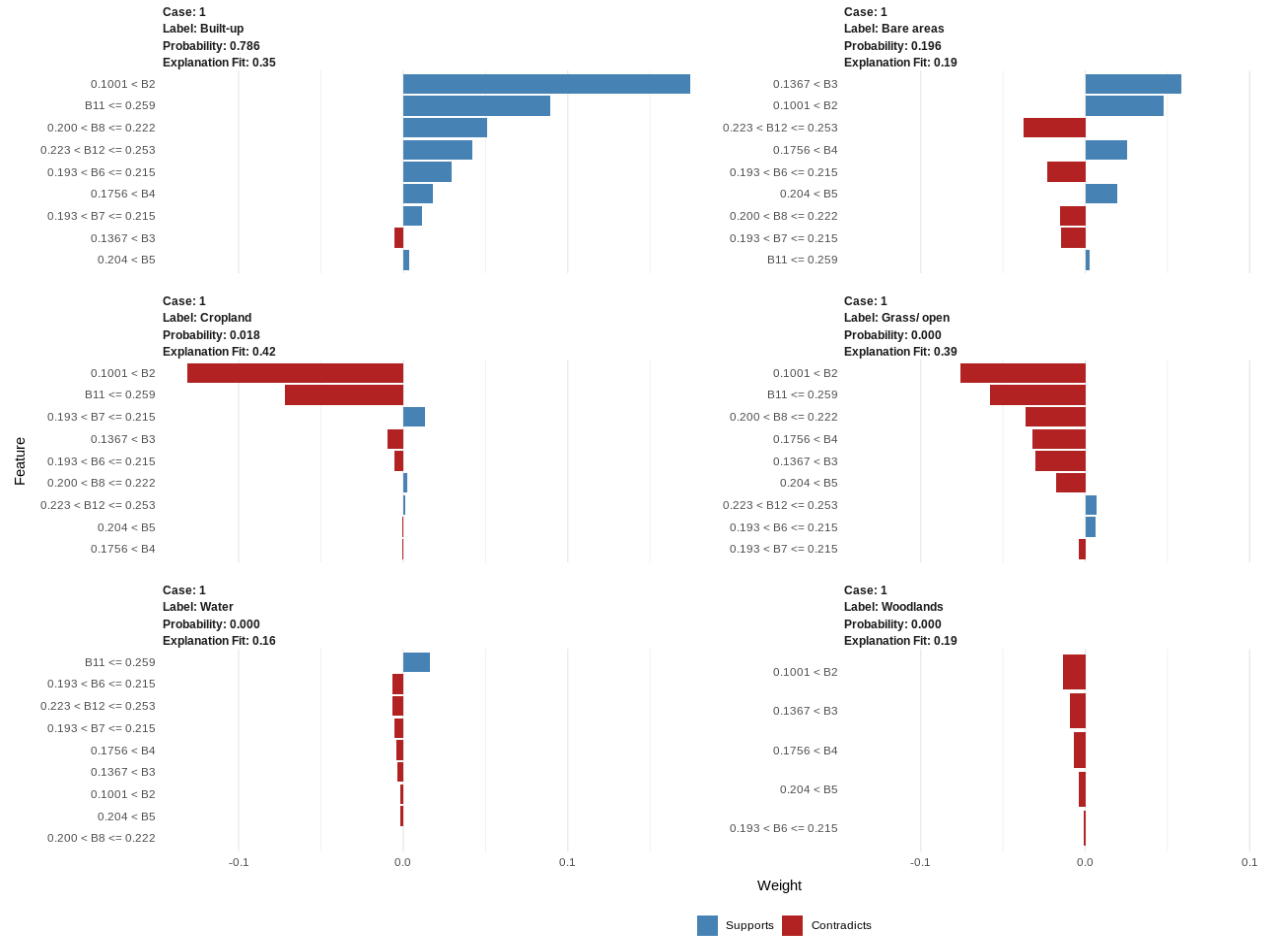

Recently, machine learning experts and data scientists have emphasized understanding machine learning models to reduce bias, improve quality, and increase transparency. Some data scientists now use explainable machine learning methods to gain insight into these models. These methods explain a model’s predictions post-hoc without necessarily revealing how it works. Although explainable machine learning methods are only approximations, they help us understand how a model arrives at its predictions. This blog post explores Local Interpretable Model-agnostic Explanations (LIME) to help understand how a random forest model predicted land cover.

Local Interpretable Model-agnostic Explanations (LIME)

Ribeiro et al. (2016) developed LIME to explain the predictions of any classifier. This method gives us an approximate understanding of which features supported a single instance’s classification and which features contradicted it. We can also see how strongly each feature influenced the prediction. LIME also produces easy-to-understand graphical visualizations, which help researchers and analysts explain complex models in a user-friendly and straightforward manner.

While LIME can explain any supervised classification or regression model, it assumes that every complex model is linear on a local scale. Therefore, it is possible to fit a simple model around a single instance (observation) based on the original input features. In general, explanations are not given globally for the entire machine learning model but locally and for every instance separately.
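The local-fit idea above can be written compactly using the objective from Ribeiro et al. (2016). Here $f$ is the complex model, $g$ is a simple (for example, linear) model drawn from a class $G$, $\pi_x$ measures how close a perturbed sample $z$ is to the instance $x$, and $\Omega(g)$ penalizes the complexity of the explanation. The exponential kernel shown is the one suggested in the paper; the distance function $D$ and width $\sigma$ are choices left to the implementation.

$$
\xi(x) = \underset{g \in G}{\arg\min} \; \mathcal{L}(f, g, \pi_x) + \Omega(g),
\qquad
\pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
$$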

The lime package comprises the lime() and explain() functions. The lime() function creates an “explainer” object that contains the machine learning model and the feature distributions for the training data. The explain() function then uses this object to generate predictions and explanations for individual observations.
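To make this two-step pattern more concrete, here is a minimal sketch in Python that uses only NumPy. It is not the lime package and does not reproduce its API: the names `build_explainer`, `explain_instance`, `toy_model`, and the band names are hypothetical, the black-box model is a made-up function rather than a random forest, and the sampling and kernel choices are simplifying assumptions.

```python
import numpy as np


def build_explainer(model_fn, X_train):
    """Step 1: store the black-box model and per-feature training spread."""
    return {"model_fn": model_fn, "std": X_train.std(axis=0)}


def explain_instance(explainer, x, n_samples=2000, kernel_width=1.0, seed=0):
    """Step 2: fit a weighted linear surrogate around a single instance x."""
    rng = np.random.default_rng(seed)
    std = explainer["std"]

    # Perturb the instance using the spread of each training feature.
    Z = x + rng.normal(size=(n_samples, x.size)) * std
    y = explainer["model_fn"](Z)

    # Weight samples by proximity to x (exponential kernel, standardized units).
    dist = np.linalg.norm((Z - x) / std, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # Weighted least squares: scale rows by sqrt(w), then solve ordinary lstsq.
    A = np.column_stack([np.ones(n_samples), (Z - x) / std])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]  # intercept, one weight per feature


def toy_model(X):
    """Hypothetical black box: probability of one land cover class."""
    logit = 4.0 * X[:, 0] - 3.0 * X[:, 1] ** 2  # third feature is ignored
    return 1.0 / (1.0 + np.exp(-logit))


rng = np.random.default_rng(42)
X_train = rng.uniform(0.0, 1.0, size=(500, 3))  # made-up training data
feature_names = ["band_a", "band_b", "band_c"]  # hypothetical band names

explainer = build_explainer(toy_model, X_train)
intercept, weights = explain_instance(explainer, np.array([0.5, 0.5, 0.5]))

for name, weight in zip(feature_names, weights):
    direction = "supports" if weight > 0 else "contradicts"
    print(f"{name}: {weight:+.3f} ({direction} the predicted class)")
```

In this sketch, a positive weight means the feature pushes the local prediction toward the class and a negative weight means it pushes against it. The weight for the ignored third feature should come out close to zero, so its sign carries no real meaning.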

Next Steps

Readers can access the blog tutorial and download the Sentinel-2 and training data from the links below.

There are many resources for learning more about LIME. The links and resources below can help you get started. You can also check out my book at the link below to learn about other explainable machine learning methods.

“Data-centric Explainable Machine Learning” class

Links and Resources

- Ribeiro MT, Singh S, Guestrin C. (2016). “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16). ACM, New York, NY, USA, 1135-1144. DOI: https://doi.org/10.1145/2939672.2939778

- R package for LIME: https://github.com/thomasp85/lime

- Open-source Python code for LIME: https://github.com/marcotcr/lime